Researchers from MIT (the Massachusetts Institute of Technology) have created an artificial intelligence system that generates people's faces from their voices. It may sound impossible, like something straight out of science fiction. But the developers have already demonstrated the results of their work: the system draws actual portraits. Let's learn more about the idea.

What is Speech2Face?

Speech2Face (S2F) is a neural network trained to infer a speaker's gender, age, and ethnicity from their voice. The system can also reconstruct an approximate portrait of a person from a speech sample.

Source: https://arxiv.org/pdf/1905.09773.pdf

How does Speech2Face work?

To train Speech2Face, the researchers used more than a million YouTube videos. By analyzing the faces and voices in this dataset, the system learned the typical correlations between facial traits and voices and how to detect them.

S2F includes two main components:

- A voice encoder. As input, it takes a speech sample converted into a spectrogram, a visual representation of sound waves. The system then encodes it into a vector that captures the predicted facial features.

- A face decoder. It takes the encoded vector and reconstructs a portrait from it. The face is generated in a standard form (front-facing, with a neutral expression).
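
To make the first step more concrete, here is a minimal sketch of how a speech sample can be turned into a spectrogram with NumPy. The function name, the frame length, the hop size, and the synthetic test tone are all assumptions chosen for illustration; the article does not state which settings the researchers used.

```python
import numpy as np

def spectrogram(signal, frame_len=400, hop=160):
    """Return a magnitude spectrogram of shape (frames, frame_len // 2 + 1).

    frame_len and hop are illustrative values, not the paper's settings.
    """
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Slice the signal into overlapping frames and apply the window.
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # One FFT per frame; keep only the magnitudes.
    return np.abs(np.fft.rfft(frames, axis=1))

# Hypothetical input: one second of a 1 kHz tone sampled at 16 kHz.
sample_rate = 16000
t = np.arange(sample_rate) / sample_rate
tone = np.sin(2 * np.pi * 1000 * t)

spec = spectrogram(tone)
# Frequency bin with the most energy, averaged over all frames.
peak_bin = int(spec.mean(axis=0).argmax())
peak_hz = peak_bin * sample_rate / 400
print(spec.shape, peak_hz)
```

Each row of `spec` describes a short slice of time and each column a frequency band, which is why a spectrogram can be treated like an image by an encoder.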

Source: https://arxiv.org/pdf/1905.09773.pdf

Why does Speech2Face work?

MIT’s research team explains in detail why the idea of recreating a face just by voice works:

“There is a strong connection between speech and appearance, part of which is a direct result of the mechanics of speech production: age, gender (which affects the pitch of our voice), the shape of the mouth, facial bone structure, thin or full lips—all can affect the sound we generate”.

Testing and results assessment

Speech2Face was tested using both qualitative and quantitative metrics. The researchers compared the traits of people in the videos with the portraits reconstructed from their voices. To evaluate the results, they built a confusion matrix, a table of true versus predicted classes.
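
As a rough illustration of this kind of evaluation, the sketch below builds such a matrix (commonly called a confusion matrix) from two lists of labels. The labels and the helper name are made up for the example and are not data from the paper.

```python
import numpy as np

def confusion_matrix(true_labels, predicted_labels, classes):
    """Rows are the true classes, columns are the predicted classes."""
    index = {c: i for i, c in enumerate(classes)}
    matrix = np.zeros((len(classes), len(classes)), dtype=int)
    for true, predicted in zip(true_labels, predicted_labels):
        matrix[index[true], index[predicted]] += 1
    return matrix

# Hypothetical labels: attribute seen in the video frame versus the
# attribute read off the face reconstructed from the voice.
classes = ["female", "male"]
from_video = ["female", "female", "male", "male", "male"]
from_voice = ["female", "male", "male", "male", "female"]

cm = confusion_matrix(from_video, from_voice, classes)
# Share of samples on the diagonal, i.e. where both labels agree.
accuracy = np.trace(cm) / cm.sum()
print(cm)
print(accuracy)
```

Values on the diagonal count agreements, while off-diagonal values show which classes get confused with each other.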

Source: https://arxiv.org/pdf/1905.09773.pdf

Demographic attributes

It turned out that S2F is good at identifying gender. However, it is far less accurate at estimating age: on average, it is off by about ±10 years. The algorithm was also found to reconstruct the features of European and Asian speakers best.

Source: https://arxiv.org/pdf/1905.09773.pdf

Craniofacial attributes

Using a face attribute classifier, the researchers found that several craniofacial traits were reconstructed well. The best matches were for the nasal index and nose width.
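
For readers unfamiliar with the term, the nasal index is conventionally defined as nose width divided by nose height, multiplied by 100. The sketch below computes it for a few made-up measurements and then uses a Pearson correlation to express how well the reconstructed values track the original ones. Both the numbers and the choice of correlation as the "match" score are assumptions for illustration, not details confirmed by this article.

```python
import numpy as np

def nasal_index(nose_width, nose_height):
    """Standard anthropometric ratio: 100 * width / height."""
    return 100.0 * nose_width / nose_height

# Hypothetical measurements in arbitrary units for four speakers.
original_width = np.array([30.0, 34.0, 38.0, 32.0])
original_height = np.array([50.0, 50.0, 50.0, 50.0])
reconstructed_width = np.array([31.0, 33.0, 37.0, 33.0])
reconstructed_height = np.array([50.0, 50.0, 50.0, 50.0])

original_index = nasal_index(original_width, original_height)
reconstructed_index = nasal_index(reconstructed_width, reconstructed_height)

# Pearson correlation between the two series (assumed match score).
correlation = np.corrcoef(original_index, reconstructed_index)[0, 1]
print(original_index, reconstructed_index, correlation)
```

A correlation close to 1 would mean the reconstructed faces preserve the relative differences between speakers for that trait.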

Source: https://arxiv.org/pdf/1905.09773.pdf

The impact of input audio duration

The researchers increased the length of the voice recordings from 3 seconds to 6. This significantly improved the S2F reconstruction results, since the facial traits were captured better.

Source: https://arxiv.org/pdf/1905.09773.pdf

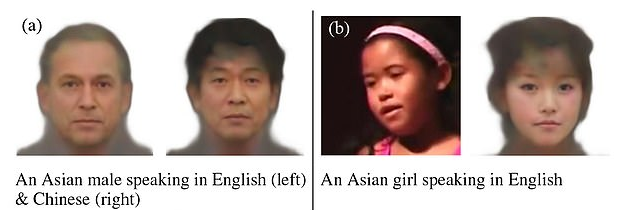

Effect of language and accent

When S2F listened to an Asian male speaking English and then Chinese, it reconstructed two different faces, one Asian and one European. However, the neural network correctly handled an Asian girl speaking English and recreated a face with Asian features. So performance was mixed when the language varied.

Source: https://arxiv.org/pdf/1905.09773.pdf

Mismatches and feature similarity

The researchers emphasize that S2F doesn’t produce an accurate representation of any individual but creates “average-looking faces.” So it can’t generate an image close enough to a person that you'd be able to recognize them. MIT’s researchers explain:

“The goal was not to predict a recognizable image of the exact face, but rather to capture dominant facial traits of the person that are correlated with the input speech.”
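
One way to score "capturing dominant traits" without demanding a pixel-exact portrait is to compare feature vectors rather than images, for example with cosine similarity. The sketch below is an assumption about how such a comparison could look; the three-element vectors are toy values, and real face features would be much longer.

```python
import numpy as np

def cosine_similarity(a, b):
    """1.0 means the same direction, 0.0 means orthogonal vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Toy feature vectors (hypothetical values).
true_face = np.array([1.0, 0.0, 2.0])
from_voice = np.array([2.0, 0.0, 4.0])  # same direction, different scale
unrelated = np.array([0.0, 3.0, 0.0])   # orthogonal to true_face

similar_score = cosine_similarity(true_face, from_voice)
unrelated_score = cosine_similarity(true_face, unrelated)
print(similar_score, unrelated_score)
```

Because the score ignores vector length, two faces can count as similar when they share the same mix of traits even if the generated image itself looks generic.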

Given that the system is still at an early stage, it occasionally makes mistakes. Here are some examples of failures:

Source: https://arxiv.org/pdf/1905.09773.pdf

Ethical questions

MIT’s researchers have voiced some ethical considerations about Speech2Face:

“The training data we use is a collection of educational videos from YouTube, and does not represent equally the entire world population … For example, if a certain language does not appear in the training data, our reconstructions will not capture well the facial attributes that may be correlated with that language.”

So, to improve Speech2Face results, the researchers need a more representative dataset. It should include people of different races, nationalities, levels of education, and places of residence, and therefore different accents and languages.

Use Case - Speech-to-cartoon

S2F-generated portraits could be used to create personalized cartoons. For example, the researchers did this with the help of the Gboard app.

Source: https://arxiv.org/pdf/1905.09773.pdf

Such cartoon-like faces can be used as a profile picture during a phone or video call, especially if a speaker prefers not to share a personal photo.

Animated faces could also be assigned directly to the voices used in virtual assistants and other smart home systems and devices.

Potential Use Cases

If Speech2Face is trained further, it could be useful for:

- Security forces and law enforcement agencies. More accurate speech-to-face reconstructions could help catch criminals, for example in cases of masked bank robberies, phoned-in terrorist threats, or extortion by kidnappers.

- Media and the motion picture industry. This technology could help VX (viewer experience) designers build mind-blowing effects.

Conclusion

At present, Speech2Face appears to be an effective classifier of gender, age, and ethnicity by voice. But the technology is also notable because it takes AI to another level. Perhaps, after further training, S2F will be able to predict a person's exact face from their voice. So it’s safe to say that we are witnessing a great breakthrough in the tech world, and it’s exciting.
