While a generic LLM such as OpenAI's ChatGPT may have some familiarity with your domain, information specific to your company, such as internal documentation or emails, is not available to it by default. As a result, such models lack the context needed to give the best responses. General-purpose LLMs can, however, be adapted to your business-specific use cases. LLM developers overcome the limitations of generic models by steering them toward the outputs most relevant to your needs: they further train pre-trained models on your enterprise's own datasets so that they generate tailored responses.

Types of LLM Training

LLM Fine-Tuning

Fine-tuning is the additional training of a general-purpose LLM that has already been pre-trained. Such an LLM has broad general knowledge but may perform poorly on domain-specific tasks without further training.

Fine-tuning feeds an LLM domain-specific labeled examples so that it completes domain-specific tasks with fewer errors and hallucinations. In effect, it customizes a general-purpose LLM for a particular area of expertise. A fine-tuned LLM can better recognize specific nuances, such as legal jargon, medical terminology, or individual user preferences.

The labeled dataset used for fine-tuning is usually split into training, validation, and test sets.
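As a minimal illustration of such a split, the sketch below shuffles a list of labeled examples and divides it 80/10/10. The ratio, the seed, the record shape, and the function name `split_dataset` are illustrative assumptions rather than a prescribed recipe; libraries such as scikit-learn provide `train_test_split` for the same job.

```python
import random


def split_dataset(examples, train_frac=0.8, val_frac=0.1, seed=42):
    """Shuffle labeled examples and split them into train/validation/test sets.

    The 80/10/10 default is an illustrative assumption, not a fixed rule.
    """
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must be between 0 and 1")
    shuffled = list(examples)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # fixed seed -> reproducible split
    n = len(shuffled)
    n_train = int(n * train_frac)
    n_val = int(n * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]       # whatever remains becomes the test set
    return train, val, test


# Hypothetical input/output pairs standing in for a real labeled dataset.
data = [{"input": f"question {i}", "output": f"answer {i}"} for i in range(100)]
train, val, test = split_dataset(data)
print(len(train), len(val), len(test))  # 80 10 10
```

Keeping the test set untouched until the very end is what makes it a fair check of performance on unseen data.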

The labeled dataset should be relevant to the specific task the LLM must learn to perform, be of high quality (free of inconsistent data and duplicates), be sufficient in quantity, and be structured as input-output pairs.

A pre-trained LLM consists of many stacked layers, each of which transforms its input in its own way. GPT-4, for example, is reported to have about 120 layers, although OpenAI has not officially disclosed its architecture. One common fine-tuning approach keeps the lower layers, which capture general knowledge, frozen (unchanged) and updates only the top layers using the task-specific data.
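The freezing idea can be shown with a toy sketch in plain Python. Here each "layer" is just a short list of numbers and the gradients are made up; in a real framework such as PyTorch, the same effect is typically achieved by setting `requires_grad = False` on the frozen parameters. The helper names `make_trainable_mask` and `sgd_step` are hypothetical.

```python
# Toy model: each "layer" is a list of weights, ordered from bottom to top.
# A real LLM has far more layers and parameters; this only illustrates freezing.


def make_trainable_mask(num_layers, num_trainable_top):
    """Return one flag per layer: True means the layer is updated during fine-tuning."""
    first_trainable = num_layers - num_trainable_top
    return [i >= first_trainable for i in range(num_layers)]


def sgd_step(layers, grads, mask, lr=0.1):
    """Apply one gradient-descent update, skipping frozen (mask=False) layers."""
    updated = []
    for weights, layer_grads, trainable in zip(layers, grads, mask):
        if trainable:
            updated.append([w - lr * g for w, g in zip(weights, layer_grads)])
        else:
            updated.append(list(weights))  # frozen: keep the pre-trained weights
    return updated


layers = [[1.0, 1.0] for _ in range(4)]  # 4 hypothetical pre-trained layers
grads = [[0.5, 0.5] for _ in range(4)]   # made-up gradients for illustration
mask = make_trainable_mask(num_layers=4, num_trainable_top=1)
new_layers = sgd_step(layers, grads, mask)
print(mask)        # [False, False, False, True]
print(new_layers)  # the bottom three layers are unchanged; only the top one moved
```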

The goal is to make the model's predictions as close as possible to the desired outputs; the validation set is used to track this during training, and the test set provides a final check on unseen data. Automated metrics (such as BLEU and ROUGE scores) are combined with human evaluation to get a 360° view of the model's performance.
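To make the automated metrics less abstract, here is a simplified ROUGE-1 F1 score, which measures unigram (single-word) overlap between a model output and a reference answer. This sketch assumes lowercase whitespace tokenization and no stemming; established packages (for example, Google's `rouge-score` or `sacrebleu` for BLEU) handle tokenization and the many metric variants properly.

```python
from collections import Counter


def rouge1_f1(candidate: str, reference: str) -> float:
    """ROUGE-1 F1: unigram overlap between a model output and a reference.

    Simplified: lowercase + whitespace tokenization, no stemming.
    """
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # shared unigrams, clipped to the smaller count
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


reference = "the contract ends on march 31"
candidate = "the contract ends in march"
print(round(rouge1_f1(candidate, reference), 3))  # 0.727
```

Four of the five candidate words appear in the six-word reference, so precision is 4/5, recall is 4/6, and F1 is 8/11. Surface-overlap scores like this miss paraphrases and factual errors, which is why they are paired with human evaluation.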

What do machine learning engineers do during LLM fine-tuning? They focus on finding:

- the optimal learning rate (the size of the step by which the model adjusts its parameters at each update): too high a rate makes training unstable, while too low a rate makes it slow.

- the right batch size (the number of training examples the algorithm processes before it updates the model). A well-chosen batch size helps avoid both overgeneralization (when the model ignores exceptions or variations) and overfitting (when it memorizes the training examples without learning the underlying principles).

- the right number of epochs (full passes over the training data), so that the model does not train for so long that it begins to overfit.
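These three settings interact, so a toy example may help. The sketch below trains a one-feature linear model (not an LLM) with mini-batch gradient descent: `lr` is the learning rate, `batch_size` is the number of examples per update, and the number of epochs is chosen by early stopping, meaning training halts once the validation loss has not improved for `patience` consecutive epochs. Early stopping is one common way to pick the epoch count and is used here as an assumption; the data and all names are hypothetical.

```python
import random


def train_linear(train, val, lr=0.01, batch_size=8, max_epochs=200, patience=5, seed=0):
    """Fit y = w*x + b with mini-batch gradient descent and early stopping.

    lr: learning rate (step size of each weight update)
    batch_size: number of examples processed before each update
    max_epochs / patience: stop once validation loss has not improved for
    `patience` consecutive epochs (one epoch = one full pass over `train`).
    Returns the best (val_loss, w, b, epoch) seen.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    best = (float("inf"), w, b, 0)
    epochs_without_improvement = 0
    data = list(train)
    for epoch in range(1, max_epochs + 1):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Gradients of the mean squared error over this batch.
            gw = sum(2 * (w * x + b - y) * x for x, y in batch) / len(batch)
            gb = sum(2 * (w * x + b - y) for x, y in batch) / len(batch)
            w -= lr * gw
            b -= lr * gb
        val_loss = sum((w * x + b - y) ** 2 for x, y in val) / len(val)
        if val_loss < best[0]:
            best = (val_loss, w, b, epoch)
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # further epochs are unlikely to help, so stop early
    return best


# Synthetic data from y = 2x + 1 with a little noise (illustrative only).
rng = random.Random(42)
points = [(x / 10, 2 * (x / 10) + 1 + rng.gauss(0, 0.05)) for x in range(-50, 50)]
rng.shuffle(points)
train, val = points[:80], points[80:]
val_loss, w, b, epoch = train_linear(train, val)
print(f"best epoch={epoch}, w={w:.2f}, b={b:.2f}, val_loss={val_loss:.4f}")
```

Raising `lr` far enough makes the updates diverge, while a very small `lr` needs many more epochs to reach the same validation loss; the same trade-offs apply, at a much larger scale, when fine-tuning an LLM.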

They also use regularization techniques that discourage the model from relying too heavily on one or a few features (characteristics) of the data and encourage it to weigh all features more evenly, which improves performance on new, unseen data.
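L2 regularization (often called weight decay) is one widely used technique of this kind; the article does not say which technique is used, so treat this as an example rather than the method. It adds a penalty proportional to the sum of squared weights to the training loss. In the sketch below, two hypothetical weight vectors produce the same prediction, but the one that leans on a single feature is penalized more.

```python
def l2_penalty(weights, lam):
    """L2 (ridge / weight-decay) penalty: lam * sum of squared weights."""
    return lam * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, lam=0.1):
    """Total training objective = data loss + L2 penalty."""
    return data_loss + l2_penalty(weights, lam)


# Two hypothetical weight vectors that give the same prediction for the
# input x = (1, 1): 2*1 + 0*1 == 1*1 + 1*1 == 2, so their data loss is equal.
concentrated = [2.0, 0.0]  # relies on a single feature
spread = [1.0, 1.0]        # uses both features evenly
print(regularized_loss(0.0, concentrated))  # 0.4
print(regularized_loss(0.0, spread))        # 0.2
```

Because squaring punishes large individual weights disproportionately, minimizing the combined objective nudges the model toward spreading its reliance across features.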

Retrieval-Augmented Generation for Large Language Models

Fine-tuned LLMs may become outdated in domains with a lot of dynamic data, because they are tied to the facts in their training datasets. They cannot acquire new information without being retrained, so they may respond inaccurately. Fine-tuning also requires a lot of labeled data, and its overall cost may be higher than that of Retrieval-Augmented Generation (RAG).

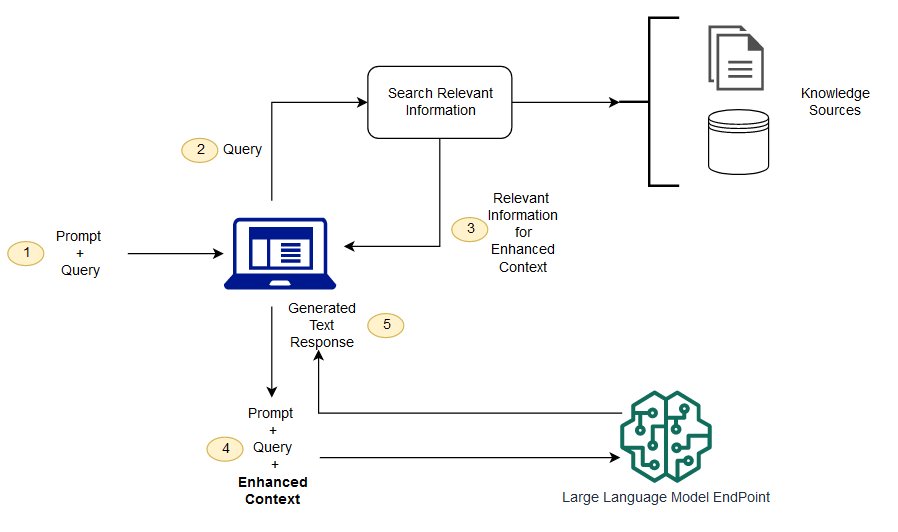

RAG is an approach that improves existing language models by integrating retrieval capabilities directly into the generation process.

From a user's perspective, RAG can be thought of as a search engine with a built-in content writer. The RAG architecture improves an LLM's performance by combining its standard generative capabilities with retrieval mechanisms. It works in three steps: it searches large external knowledge bases, finds information relevant to a given prompt, and generates new text based on that information.

Machine learning engineers often implement RAG with a vector database. The knowledge base is converted into vectors (embeddings) and stored in this database. When a user submits a query to the LLM, the query is also converted into a vector, and the retrieval component searches the database for the most similar vectors. The corresponding information is combined with the user query to form an augmented query, which is then fed into the LLM so that it can generate an up-to-date response.
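The retrieval-and-augmentation flow can be sketched end to end in plain Python. To stay self-contained, this sketch makes two loud assumptions: a bag-of-words count vector stands in for a real embedding model, and an in-memory list stands in for a vector database. All names (`embed`, `InMemoryVectorStore`, `build_augmented_prompt`) and the knowledge-base entries are hypothetical; the final prompt would be sent to whichever LLM the system uses.

```python
import math
import re
from collections import Counter


def embed(text):
    """Hypothetical stand-in for an embedding model: a bag-of-words count vector.

    Real systems use learned dense embeddings; word counts keep this sketch self-contained.
    """
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class InMemoryVectorStore:
    """Toy replacement for a vector database: stores (id, text, vector) triples."""

    def __init__(self):
        self.items = []

    def add(self, doc_id, text):
        self.items.append((doc_id, text, embed(text)))

    def search(self, query, k=2):
        q = embed(query)
        scored = sorted(self.items, key=lambda item: cosine(q, item[2]), reverse=True)
        return [(doc_id, text) for doc_id, text, _ in scored[:k]]


def build_augmented_prompt(query, store, k=2):
    """Combine the most similar passages with the user query (the augmented query)."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in store.search(query, k))
    return (
        "Answer using only the context below and cite sources by id.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


store = InMemoryVectorStore()
store.add("kb-1", "Refunds are processed within 14 days of receiving the returned item.")
store.add("kb-2", "Our support line is open Monday to Friday, 9am to 6pm.")
store.add("kb-3", "Firmware updates are installed from the device settings menu.")

prompt = build_augmented_prompt("How long do refunds take?", store, k=1)
print(prompt)  # the augmented prompt that would be passed to the LLM
```

Tagging each passage with an id lets the model cite its sources, which is what makes the answers traceable; updating the knowledge base only requires adding or replacing entries, not retraining the model.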

RAG reduces the problem of 'best-guess' answers and keeps responses current, because it draws on information that can be updated as it changes over time. Since the model generates its answer from retrieved data, fabricated responses become much less likely. When the retrieved passages are returned as references, the source of an LLM's answer can easily be traced, which is essential for quality assurance.

Chatbots with RAG capabilities can efficiently retrieve relevant information from:

- an organization's instruction manuals and technical documents (for customer support);

- up-to-date medical documents and clinical guidelines (for medical staff);

- an institution's study materials aligned with specific curriculum requirements (for educational institutions);

- a repository of past depositions and legal decisions (for legal professionals).

RAG can also improve language translation in specialized fields.

LLM Training Stages

Data Sources Preparation

The goal here is to find and prepare data that is sufficient in volume, relevant to and focused on the target use cases, and of reasonably high quality (so that it is ready for cleaning).

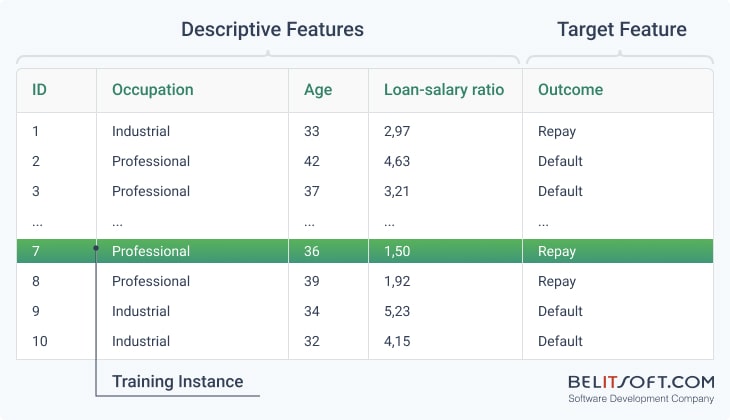

Example of a labeled dataset with descriptive features and a target feature

Data Cleaning

At this stage, machine learning engineers remove corrupted records from the training datasets, deduplicate repeated copies of the same data, and, when feasible, complete partial records by filling in missing information. OpenRefine and a variety of proprietary data quality and cleaning tools are available for this purpose.
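A small custom cleaning pass might look like the sketch below. It assumes a hypothetical record shape of `{"input": str, "output": str}`, treats records with a missing or non-string field as corrupted, and simply drops them, since filling in missing data correctly usually needs domain knowledge. Normalizing whitespace before comparison lets near-identical copies be recognized as duplicates.

```python
import re


def clean_records(records):
    """Drop corrupted records, normalize whitespace, and remove duplicates.

    Assumed (hypothetical) record shape: {"input": str, "output": str}.
    """
    seen = set()
    cleaned = []
    for rec in records:
        inp, out = rec.get("input"), rec.get("output")
        if not isinstance(inp, str) or not isinstance(out, str):
            continue  # corrupted or incomplete record
        # Collapse runs of whitespace so near-identical copies compare equal.
        inp = re.sub(r"\s+", " ", inp).strip()
        out = re.sub(r"\s+", " ", out).strip()
        if not inp or not out:
            continue  # empty after normalization
        key = (inp.lower(), out.lower())
        if key in seen:
            continue  # duplicate: keep only the first copy
        seen.add(key)
        cleaned.append({"input": inp, "output": out})
    return cleaned


raw = [
    {"input": "How do I reset my password?", "output": "Use the 'Forgot password' link."},
    {"input": "How do I  reset my password? ", "output": "Use the 'Forgot password' link."},
    {"input": "Where is my invoice?", "output": None},
    {"output": "Orphan answer with no question"},
]
print(clean_records(raw))  # only the first password record survives
```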

Data Formatting

Models recognize patterns and input-output relationships better when the training data follows a consistent, specified structure. For example, the inputs might be customer questions and the outputs the support team's responses. Machine learning engineers may convert the source data into JSON, using custom scripts to speed up the process and tweaking and cleaning it up manually where necessary.
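A conversion script of this kind could look like the following sketch, which writes question/answer pairs as JSON Lines (one JSON object per line). The chat-style `messages` layout with `system`, `user`, and `assistant` roles resembles the format used by OpenAI's fine-tuning API, but the exact schema depends on the training framework, so treat it as an assumption. The system prompt and "Acme Inc." are hypothetical.

```python
import json


def to_chat_jsonl(pairs, system_prompt):
    """Convert (question, answer) pairs into JSON Lines, one training example per line.

    Uses a chat-style "messages" layout; check your training framework for its exact schema.
    """
    lines = []
    for question, answer in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},      # input: customer question
                {"role": "assistant", "content": answer},   # output: support response
            ]
        }
        lines.append(json.dumps(example, ensure_ascii=False))
    return "\n".join(lines) + "\n"


pairs = [
    ("Can I change my delivery address?", "Yes, until the order ships. Go to Orders > Edit."),
    ("Do you ship abroad?", "We currently ship to the EU and the UK."),
]
jsonl = to_chat_jsonl(pairs, system_prompt="You are a helpful support agent for Acme Inc.")
print(jsonl)
```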

Adjusting Parameters

Models are trained with deep learning frameworks that support the Transformer architecture, and the training parameters are customized for each model.

Tweaking the parameters that govern how the LLM interprets data is a way to guide it toward the desired behavior. The AI team decides which parameters to customize, which to leave untouched, and how best to adjust them, using methods such as LoRA (Low-Rank Adaptation), which keeps the original model weights frozen and trains only small low-rank matrices added to selected layers. Adjusting these weights changes how strongly the model associates the patterns found in the training data.
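The core LoRA computation is small enough to sketch directly. For a frozen weight matrix W of shape d x k, LoRA learns two small matrices, A (r x k) and B (d x r), and the adapted layer computes W x + (alpha / r) B A x. The pure-Python sketch below (no ML framework; tiny hypothetical matrices) shows that forward pass and compares trainable parameter counts. Initializing B to zeros follows the original LoRA paper, so the adapted layer starts out identical to the pre-trained one. Libraries such as Hugging Face PEFT provide production implementations.

```python
def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """Compute W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained.

    Shapes: W is d x k, A is r x k, B is d x r, x has length k.
    """
    base = matvec(W, x)
    delta = matvec(B, matvec(A, x))
    scale = alpha / r
    return [base_i + scale * delta_i for base_i, delta_i in zip(base, delta)]


def trainable_params(d, k, r):
    """Trainable parameter counts for one d x k matrix: full fine-tuning vs LoRA."""
    return {"full": d * k, "lora": r * (d + k)}


# Tiny example: with B initialised to zeros, the adapted layer initially
# behaves exactly like the frozen pre-trained layer.
W = [[1.0, 2.0], [3.0, 4.0]]
A = [[0.5, -0.5]]  # rank r = 1
B = [[0.0], [0.0]]
print(lora_forward(W, A, B, x=[1.0, 1.0], alpha=2, r=1))  # [3.0, 7.0]

print(trainable_params(d=4096, k=4096, r=8))  # {'full': 16777216, 'lora': 65536}
```

For a 4096 x 4096 matrix with rank 8, LoRA trains 65,536 parameters instead of about 16.8 million, which is why it is popular when compute or memory is limited.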

LLM Training Process

Machine learning engineers run training code that learns from the custom data using the previously set parameters. The process can take anywhere from hours to weeks, depending on the size of the model and the dataset.

They train the LLM in a three-stage process that includes self-supervised learning, supervised learning, and reinforcement learning.

First, the model reads a large amount of text from your domain on its own (self-supervised learning). It learns how language works by predicting which word (token) is likely to come next.
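What makes this stage "self-supervised" is that the labels come from the text itself: the target for each position is simply the next token. The sketch below illustrates that idea with a word-level bigram counter on a hypothetical legal snippet. A real LLM uses subword tokens and a neural network conditioned on long contexts, so this is only an analogy for the training objective, not how an LLM is built.

```python
from collections import Counter, defaultdict


def make_training_pairs(tokens):
    """Self-supervision: each token's label is simply the token that follows it."""
    return list(zip(tokens[:-1], tokens[1:]))


def train_bigram(text):
    """Count which word follows which: a tiny stand-in for next-token prediction."""
    tokens = text.lower().split()
    counts = defaultdict(Counter)
    for current, nxt in make_training_pairs(tokens):
        counts[current][nxt] += 1
    return counts


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    return followers.most_common(1)[0][0] if followers else None


corpus = (
    "the lessee shall pay the rent monthly . "
    "the lessee shall maintain the premises . "
    "the lessor shall repair the roof ."
)
model = train_bigram(corpus)
print(predict_next(model, "lessee"))  # shall
```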

Then, our data scientists give the model curated examples of instructions paired with desired responses to learn from (supervised learning). After this, it can follow instructions and generalize better to new tasks it hasn't seen before.

Finally, our staff rate the model's answers, and this feedback is used to teach the model which answers are preferred (reinforcement learning from human feedback).
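One common way to turn such ratings into a training signal is to train a reward model on pairs of answers where reviewers preferred one over the other, using a pairwise (Bradley-Terry style) loss, -log(sigmoid(r_chosen - r_rejected)). The reward model then guides the reinforcement learning step. The article does not specify the exact method, so this is an illustrative assumption; the reward scores below are made up.

```python
import math


def pairwise_preference_loss(reward_chosen, reward_rejected):
    """Bradley-Terry style reward-model loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred answer receives a clearly higher reward score.
    """
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(m)) == log(1 + exp(-m)); branch on the sign to avoid overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


print(round(pairwise_preference_loss(2.0, 0.0), 4))  # preferred answer scored higher: low loss
print(round(pairwise_preference_loss(0.0, 0.0), 4))  # 0.6931 (log 2): no preference learned yet
print(round(pairwise_preference_loss(0.0, 2.0), 4))  # wrong ordering: high loss
```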

Then the trained model is tested. The goal is an LLM that is accurate across your domain, consistent, fluent in natural language, effective in real-world tasks such as problem-solving, and able to answer factual questions with minimal hallucinations.

In the end, the LLM is integrated into the appropriate real-world application.

